Introduction

Whether you run a physical or an online business, it's wise to start small and scale gradually. The same principle applies to building applications and software.

Start Small, Scale Big - Unknown

Starting small keeps the risk low in terms of time and money, and it gives you something to build on. If something goes wrong, little time and money is wasted, and you can redesign the solution with minimal effort.

Real-world example

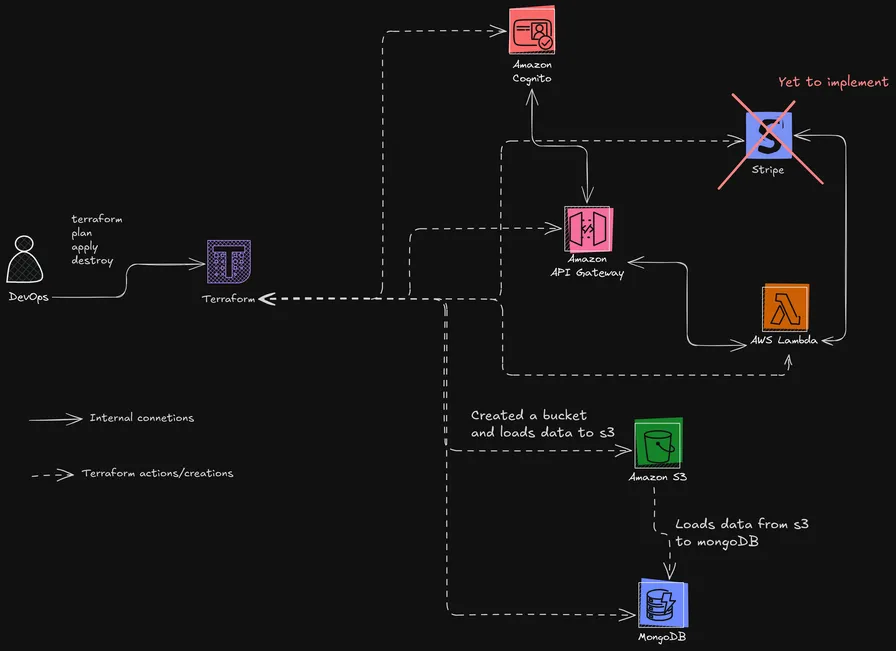

Let's say you want to build a multi-tenant cloud application that serves an API as a service. You have a few basic components:

- Cognito for user management

- Lambda functions for running and processing data

- API Gateway for serving the processed data

- MongoDB for data storage

- S3 for file storage

- Secrets Manager for storing sensitive information

- Stripe as a payment gateway

- IAM roles and policies for all the above resources

- Possibly, support for making all of this cloud-agnostic

You may also need other services that you don't know about, or don't need, at the beginning.

Day 1, Day 2, ..., Day n

On day 1, let's say a developer sets up Cognito, changes a few configurations on AWS, creates a MongoDB cluster, creates a database and a collection, loads the collection with some dummy data to get started, and creates a database of users with a few rules.

The next day, the developer adds a few Lambda functions with environment variables, configs, and so on, and creates an API Gateway configured to use a specific Cognito user pool.

This keeps growing as you add more infrastructure to the application. By day n, you have a large infrastructure up and running.

As you keep adding components, at some point:

- Your infrastructure grows and you lose track of what is running where.

- It consumes more resources, and your bill grows every day.

- You need to manually delete instances when they are not in use and configure them again when you need them.

- You will probably forget to delete some of them, and resources left running on the network can cost you money every month.

- Setting up the infrastructure manually on the cloud, even for development, involves many resources, which costs both time and money.

- The developer needs to manage this on one or more cloud platforms.

IaC can address these complications.

What

IaC - Infrastructure as Code.

Imagine a script that finds the LCM (least common multiple) of three numbers. You would probably write something like this:

```python
import math  # import section


def lcm(a, b):
    """LCM of two integers, using LCM(a, b) = |a * b| // GCD(a, b)."""
    return abs(a * b) // math.gcd(a, b)


def lcm_of_three_numbers(x, y, z):
    """LCM of three integers: apply the two-number LCM twice."""
    return lcm(lcm(x, y), z)


# Example usage: variables
num1 = 12
num2 = 15
num3 = 20

result = lcm_of_three_numbers(num1, num2, num3)  # call the function

print(f"LCM of {num1}, {num2}, and {num3} is: {result}")  # output
```

The script has distinct sections: imports, functions, variables, and output. You can give the same code to someone who doesn't know how to find the LCM of three numbers, and they will be able to run it and see the result.

Here, the code processes data (integer arithmetic). IaC works the same way: you write scripts in a language, but instead of processing data or solving a problem, the script builds infrastructure for you. Terraform is one such IaC tool.

What is infrastructure?

To cook, you need gas, a stove, and utensils for the dish you are making. Likewise, to run code, software, or an app, you need a machine, an OS, and packages. For the Python code above, the infrastructure looks something like this:

| Component | Requirement |
|---|---|
| Machine processor | 2 |
| RAM | 512MB |
| Storage | 512MB |
| OS | Linux/Windows |
| Interpreter | Python 3.x |
| Package | math (Python standard library) |
| Source | lcm.py |

Is it easy to set up this infrastructure manually to run the code above? Yes.

For complex infrastructure? No. The developer has to keep track of every component and set each one up individually. And if you need more than one environment (integration, development, QA, and UAT), manual setup takes even more time and invites human error.

How

To address this, cloud providers offer CLIs, SDKs, CDKs, and APIs for interacting with their services. For example, to create an S3 bucket on AWS, you can use this CLI command:

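```shell
aws s3api create-bucket --bucket my-bucket-name --region us-east-1
```

The Azure CLI uses a different syntax. For example, this command creates a resource group, the container that holds Azure resources such as storage accounts:

```shell
az group create --name myResourceGroup --location eastus
```

And with govc, the CLI for VMware vSphere, creating a VM looks like this:

```shell
govc vm.create -m 4096 -c 2 -g "otherGuest64" -ds datastore1 -net VM_Network -folder /myVms -pool /Resources -on=false my-vm
```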

This approach works, but:

- The structure and syntax vary from provider to provider.

- There is no single place to track which resources have been deployed on which provider.

- You need to write extra scripts to check the status of deployments.

- Maintaining modularity becomes hard.

- There is no versioned record of infrastructure changes.

- There is no cross-provider management.

- There is no preview of changes and no built-in rollback mechanism.

IaC addresses these problems and gives developers more control and flexibility.

Terraform

Terraform is a leading IaC tool that uses HCL (HashiCorp Configuration Language). It supports a wide range of providers; you can browse the complete list in the Terraform Registry. (Terraform was originally released as open source; since 2023, new releases are distributed under HashiCorp's Business Source License.) Let's see how Terraform works and how it can be used across multiple providers.

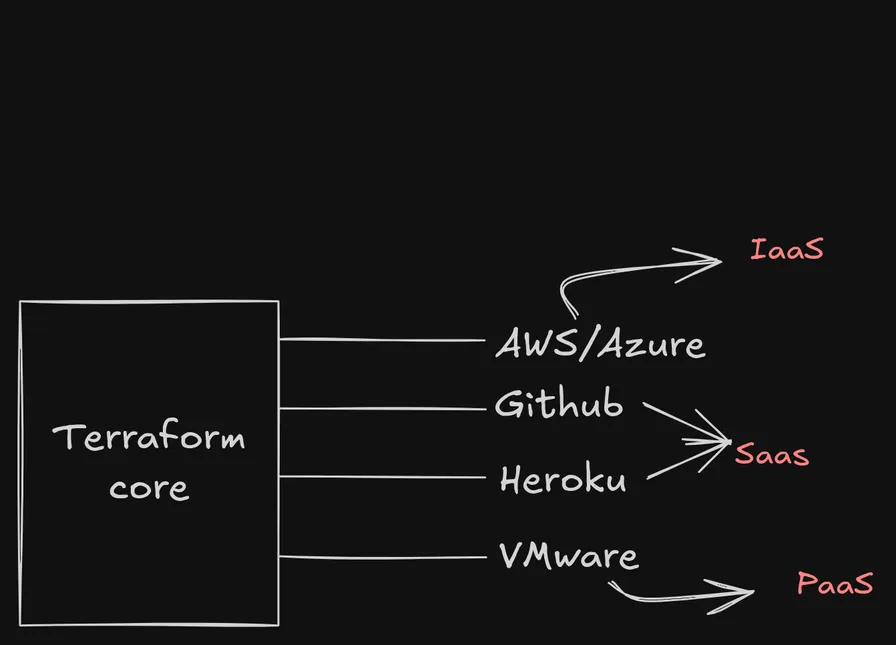

Does Terraform have its own CLI/API for interacting with every provider? No.

To understand how Terraform interacts with multiple providers such as AWS, Azure, and GCP, let's look at Terraform Core.

Terraform Core

Imagine that you need to talk with 10 different people, each of whom speaks a different language. You have two options:

- Learn all 10 languages and talk to each person directly, which is hard and time-consuming.

- Use one or two translators who know these languages and talk to the 10 people through them.

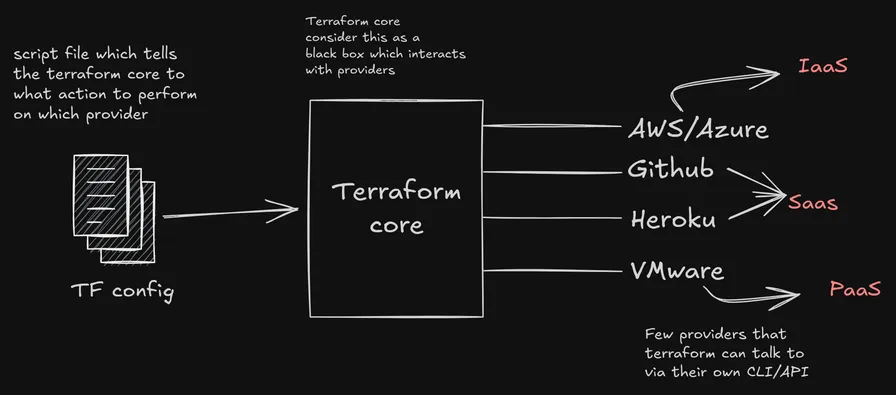

Terraform takes option 2. Platforms such as AWS, Azure, GCP, MongoDB Atlas, and VMware each expose their own APIs. Instead of you writing custom CLI or API calls for each one, Terraform relies on provider plugins, the "translators", which turn your configuration into the API calls each platform understands. Terraform Core talks to these plugins, not to the platforms directly.

Terraform Core is the engine that reads your configuration, works out what needs to change, and hands that work to the provider plugins. To tell Core what you want, you write TF configuration files. What are these files, and how do you write them? Let's dive in.
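To make the translator analogy concrete, here is a toy Python sketch. The names `Provider`, `AwsProvider`, `VsphereProvider`, and `TerraformCore` are hypothetical and do not mirror Terraform's real internals (real providers are separate Go binaries that Core talks to over RPC). The sketch only shows the shape of the idea: the core speaks one common interface, and each provider translates it into its own platform's calls.

```python
from abc import ABC, abstractmethod


class Provider(ABC):
    """Hypothetical common interface that every 'translator' implements."""

    @abstractmethod
    def create(self, resource_type: str, name: str) -> str:
        """Create a resource and return a description of the platform call made."""


class AwsProvider(Provider):
    def create(self, resource_type: str, name: str) -> str:
        # A real provider would call the AWS API here; we only describe the call.
        return f"AWS API: create {resource_type} '{name}'"


class VsphereProvider(Provider):
    def create(self, resource_type: str, name: str) -> str:
        # Likewise, a real provider would call the vSphere API here.
        return f"vSphere API: create {resource_type} '{name}'"


class TerraformCore:
    """Toy 'core': knows nothing about any platform, only the Provider interface."""

    def __init__(self) -> None:
        self.providers: dict[str, Provider] = {}

    def register(self, name: str, provider: Provider) -> None:
        self.providers[name] = provider

    def apply(self, resources: list[tuple[str, str, str]]) -> list[str]:
        # Each resource is (provider_name, resource_type, resource_name);
        # the core just looks up the right translator and delegates.
        return [self.providers[p].create(rtype, rname) for p, rtype, rname in resources]


core = TerraformCore()
core.register("aws", AwsProvider())
core.register("vsphere", VsphereProvider())

for line in core.apply([
    ("aws", "s3_bucket", "my-bucket"),
    ("vsphere", "virtual_machine", "my-vm"),
]):
    print(line)
```

Supporting a new platform in this sketch means adding another `Provider` subclass; `TerraformCore` itself does not change. That separation is the point the translator analogy is making.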

Before we dive into configurations, let's review a few key questions and answers to better understand the process.

Q&A

What are providers?

In general, a provider is anyone who offers services to end users; AWS, Azure, GCP, and GitHub are examples. In Terraform, a provider is a plugin that lets Terraform create and manage that platform's resources.

Does Terraform only support cloud providers?

No. Terraform also supports on-premises platforms such as VMware vSphere. Any platform that exposes an API a provider plugin can call can be managed this way.

What does Terraform Core consist of?

It is a program written in Go that parses configuration files, tracks state, plans changes, and communicates with provider plugins. You can treat it as a black box; you don't need to know its internal logic to use Terraform.

OK, now back to TF configs.

TF config

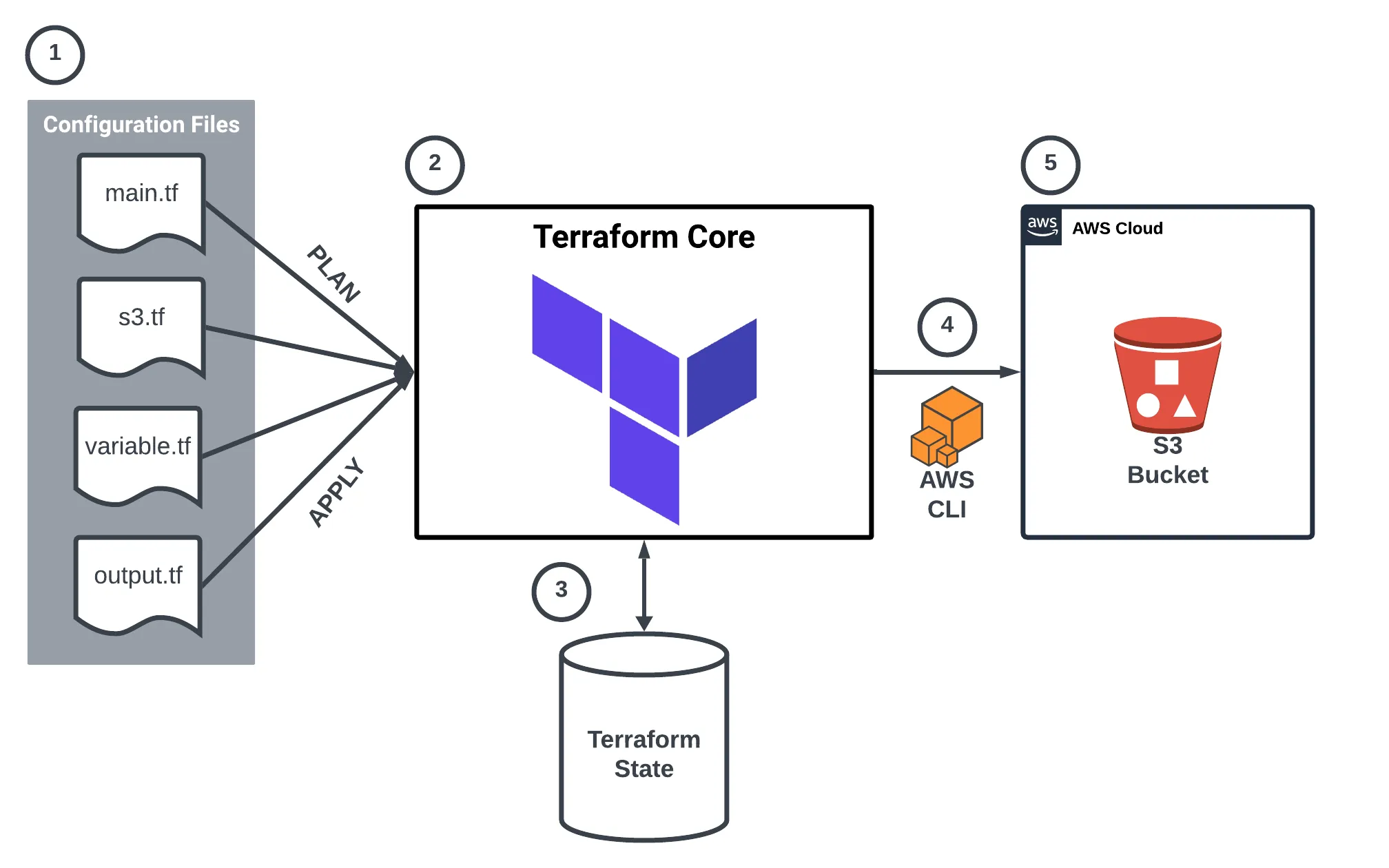

Terraform scripts (IaC) are written in HCL and stored in files with the .tf extension, just as Python and JavaScript files use .py and .js. A file ending in .tf is called a TF config file. Let's look at the common TF config files.

main.tf

As the name implies, main.tf is the main config file. (Strictly speaking, Terraform loads every .tf file in the working directory, so main.tf is a naming convention rather than a required entry point.) A basic main file has two sections:

- provider - the platform we are going to set up the infrastructure on, e.g. AWS or Azure

- resource - a resource on that provider, e.g. Lambda functions and EC2 instances on AWS

```hcl
# Specify the AWS provider
provider "aws" {
  region = "us-east-1" # Specify your AWS region
}

# Create an S3 bucket
resource "aws_s3_bucket" "my_bucket" {
  bucket = "my-unique-simple-bucket-name" # Make sure this is a unique name
}
```

The config above uses AWS as the provider and creates an S3 bucket as the resource. This is a basic TF config; it can be structured further and made more useful by passing in variables and returning outputs from the script:

```hcl
# Specify the AWS provider
provider "aws" {
  region = "us-east-1" # Specify your AWS region
}

# Define a variable for the S3 bucket name directly in the main.tf
variable "bucket_name" {
  description = "The name of the S3 bucket"
  type        = string
}

# Create an S3 bucket using the bucket_name variable
resource "aws_s3_bucket" "my_bucket" {
  bucket = var.bucket_name # Reference the variable for bucket name
}

# Output the bucket name after creation
output "bucket_name" {
  value       = aws_s3_bucket.my_bucket.bucket
  description = "The name of the S3 bucket created"
}
```

In the above code block, you can see additional blocks:

- variable

- output

Variables let you pass values in dynamically, and outputs give a structured result after execution. Remember modularity? You can move these blocks into separate files named variables.tf and output.tf:

```hcl
# variables.tf
variable "bucket_name" {
  description = "The name of the S3 bucket"
  type        = string
}
```

```hcl
# output.tf
output "bucket_name" {
  value       = aws_s3_bucket.my_bucket.bucket
  description = "The name of the S3 bucket created"
}
```

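Note that every .tf file is a TF configuration file. Terraform loads all .tf files in the directory together, so each variable or output must be declared only once; remove the blocks from main.tf after moving them into their own files.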

Modules

A module is a container for multiple resources that can be reused across different configurations. Modules help organize and abstract infrastructure components.

Example:

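```hcl
module "network" {
  source = "./network"   # local directory containing the module's .tf files
  cidr   = "10.0.0.0/16" # input variable declared inside the module
}
```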
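A module behaves much like a function: it takes inputs (such as `cidr`), creates a group of related resources, and can be reused with different values. The Python sketch below is only an analogy under that assumption; `network_module` and the resource names it produces are hypothetical and are not what the `./network` module above actually contains.

```python
import ipaddress


def network_module(name: str, cidr: str, subnet_count: int = 2) -> dict:
    """Hypothetical 'module': one reusable unit that expands into several resources.

    Given a VPC CIDR, it carves out `subnet_count` /24 subnets, similar in spirit
    to a Terraform module that groups a VPC and its subnets behind a few inputs.
    """
    vpc = ipaddress.ip_network(cidr)
    subnets = list(vpc.subnets(new_prefix=24))[:subnet_count]
    resources = {f"{name}_vpc": {"cidr_block": str(vpc)}}
    for i, subnet in enumerate(subnets):
        resources[f"{name}_subnet_{i}"] = {"cidr_block": str(subnet)}
    return resources


# The same "module" reused with different inputs, like two module blocks
# that point at the same source directory.
dev = network_module("dev", "10.0.0.0/16")
prod = network_module("prod", "10.1.0.0/16", subnet_count=3)
print(dev)
print(prod)
```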

State Management

Terraform tracks infrastructure using a state file, which maps Terraform resources to their corresponding real-world infrastructure. By default it is stored locally, but it can be kept in a remote backend such as S3 so that a team can collaborate.

Now let's see how to apply these infrastructure changes to providers.

Remote Procedure Call

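A remote procedure call (RPC) is a protocol that allows a computer program to run a function or procedure on a different computer or server, without the programmer needing to explicitly code the communication details. Terraform uses this idea internally: Terraform Core calls provider plugins, which run as separate processes, over RPC (gRPC). You never issue these RPCs yourself; you drive Terraform with the CLI commands below.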
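To see the RPC idea in action, here is a minimal sketch using Python's standard-library XML-RPC modules. It only illustrates the concept: Terraform itself uses gRPC between Core and its provider plugins, not XML-RPC, and `create_bucket` is a hypothetical function that does not touch any cloud.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def create_bucket(name: str) -> str:
    """The 'remote' procedure: it runs inside the server, not in the caller."""
    return f"created bucket {name}"


# Server side: expose create_bucket over XML-RPC on a free localhost port.
server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
server.register_function(create_bucket)
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

# Client side: the call looks local, but the arguments and the result
# travel over HTTP between the two ends.
with ServerProxy(f"http://127.0.0.1:{port}/") as proxy:
    result = proxy.create_bucket("my-bucket")
    print(result)

server.shutdown()
server.server_close()
```

The client never opens a socket or formats a request by hand; the proxy object hides those communication details, which is exactly what the definition above describes.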

Before proceeding, follow the installation steps:

https://developer.hashicorp.com/terraform/tutorials/aws-get-started/install-cli

You will also need credentials for the provider you plan to use; for AWS, installing the AWS CLI and running aws configure is a common way to set them up. Run all of the following commands from the root folder that contains main.tf.

terraform init

Purpose:

This command is used to initialize a working directory containing Terraform configuration files. It sets up the required backend, providers, and modules so that Terraform can manage your infrastructure.

Key Steps:

- Downloads necessary provider plugins (e.g., AWS, Azure, Google Cloud).

- Initializes the backend (where Terraform state files are stored).

- Prepares the working directory for other Terraform commands (e.g., plan and apply).

```shell
terraform init
```

When to Use:

- The first time you start working with Terraform in a new directory.

- Whenever you add or update providers or modules.

- When you clone an existing Terraform repository to a new machine.

terraform plan

Purpose:

This command is used to create an execution plan, showing what changes Terraform will make to your infrastructure. It doesn't make any actual changes but gives you a preview of what Terraform will do.

Key Features:

- Compares the current state of your infrastructure with your configuration files.

- Identifies resources that need to be created, updated, or destroyed.

- Displays a human-readable summary of the changes.

```shell
terraform plan
```

When to Use:

- Before applying changes to ensure that the changes are correct and expected.

- During the planning phase of infrastructure changes to see potential modifications.
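Conceptually, the comparison step in `terraform plan` is a diff between what the configuration asks for and what the state says exists. The toy Python sketch below assumes both are plain dictionaries keyed by resource address; the `plan` function is hypothetical. Real Terraform also refreshes state from the provider and decides whether a change can be made in place or forces a replacement, which this sketch ignores.

```python
def plan(desired: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Toy version of the create/update/destroy summary that `terraform plan` reports.

    `desired` maps resource addresses to attributes from the configuration;
    `current` maps addresses to attributes recorded in the state.
    """
    to_create = sorted(addr for addr in desired if addr not in current)
    to_destroy = sorted(addr for addr in current if addr not in desired)
    to_update = sorted(
        addr for addr in desired
        if addr in current and desired[addr] != current[addr]
    )
    return {"create": to_create, "update": to_update, "destroy": to_destroy}


desired = {
    "aws_s3_bucket.my_bucket": {"bucket": "my-unique-simple-bucket-name", "tags": {"env": "prod"}},
    "aws_s3_bucket.logs": {"bucket": "my-logs-bucket", "tags": {}},
}
current = {
    "aws_s3_bucket.my_bucket": {"bucket": "my-unique-simple-bucket-name", "tags": {"env": "dev"}},
    "aws_s3_bucket.tmp": {"bucket": "scratch-bucket", "tags": {}},
}

summary = plan(desired, current)
print(summary)
print(f"Plan: {len(summary['create'])} to add, "
      f"{len(summary['update'])} to change, {len(summary['destroy'])} to destroy.")
```

Nothing is created or deleted here; like `terraform plan`, the function only reports what would change.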

terraform apply

Purpose:

This command is used to apply the changes required to reach the desired state of the configuration. It creates, modifies, or destroys infrastructure resources according to the plan.

Key Steps:

- Executes the changes defined in your .tf configuration files.

- Interactively prompts for approval before proceeding (unless the -auto-approve flag is used).

```shell
terraform apply
```

When to Use:

- After reviewing the plan and you're ready to implement changes in your infrastructure.

terraform destroy

Purpose:

This command is used to destroy, or tear down, the infrastructure managed by Terraform. It removes every resource that the Terraform state tracks for the current configuration.

Key Steps:

- It reads the current state and deletes all resources that are being managed.

- Like apply, it prompts for confirmation before destroying resources unless -auto-approve is passed.

```shell
terraform destroy
```

When to Use:

- When you want to remove all infrastructure resources managed by Terraform completely.

- For cleaning up after testing or when decommissioning infrastructure.
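These commands are often chained in a script or CI job. Below is a minimal Python sketch of that, assuming the Terraform CLI is on PATH; `terraform_workflow` is a hypothetical helper, while the flags it uses (`-input=false`, `-out`, `-auto-approve`) are standard Terraform CLI options. Saving the plan with `-out` and then applying that file means Terraform applies exactly the plan you reviewed, and applying a saved plan does not prompt again.

```python
import shutil
import subprocess


def terraform_workflow(workdir: str = ".", destroy: bool = False,
                       dry_run: bool = True) -> list[list[str]]:
    """Run init -> plan -> apply (or init -> destroy) in `workdir`.

    With dry_run=True (the default) the commands are only returned, not
    executed, so the sketch can be tried without Terraform installed.
    """
    commands = [["terraform", "init", "-input=false"]]
    if destroy:
        commands.append(["terraform", "destroy", "-auto-approve"])
    else:
        # Save the reviewed plan to a file, then apply exactly that plan.
        commands.append(["terraform", "plan", "-out=tfplan"])
        commands.append(["terraform", "apply", "tfplan"])

    if not dry_run:
        if shutil.which("terraform") is None:
            raise RuntimeError("terraform CLI not found on PATH")
        for cmd in commands:
            # check=True stops the workflow as soon as one command fails.
            subprocess.run(cmd, cwd=workdir, check=True)
    return commands


for cmd in terraform_workflow():
    print(" ".join(cmd))
```

Skipping the interactive prompt suits CI pipelines; on a workstation, the interactive `terraform apply` is a useful safety net.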

We now know that IaC makes life easier for DevOps engineers: it provisions the infrastructure and also remembers its state, i.e., whether each resource has been created or destroyed on the provider platform. But how does it remember the infrastructure and its state?

The answer lies in the Terraform state file, terraform.tfstate.

This file records every resource Terraform manages, including which provider manages it and its current attributes, along with the configuration's output values. It is the backbone of state management. The good news is that you don't need to create or configure it yourself: Terraform creates and updates it automatically. Because it can contain sensitive values, treat it as a secret and avoid editing it by hand.
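The state file is JSON, so you can peek at what it records. Here is a short Python sketch that lists managed resources, assuming the version 4 state format (a top-level `resources` list whose entries have `mode`, `type`, `name`, and `instances[].attributes`). `summarize_state` is a hypothetical helper, and the sample below is a trimmed, hand-written example rather than output from a real run.

```python
import json


def summarize_state(state_json: str) -> list[str]:
    """Return 'type.name -> id' for every managed resource instance in a state document."""
    state = json.loads(state_json)
    lines = []
    for res in state.get("resources", []):
        if res.get("mode") != "managed":  # skip data sources, which Terraform only reads
            continue
        for inst in res.get("instances", []):
            rid = inst.get("attributes", {}).get("id")
            lines.append(f"{res['type']}.{res['name']} -> {rid}")
    return lines


# Hand-written, trimmed sample in the shape of a version 4 state file.
sample = json.dumps({
    "version": 4,
    "outputs": {"bucket_name": {"value": "my-unique-simple-bucket-name", "type": "string"}},
    "resources": [{
        "mode": "managed",
        "type": "aws_s3_bucket",
        "name": "my_bucket",
        "provider": "provider[\"registry.terraform.io/hashicorp/aws\"]",
        "instances": [{"attributes": {"id": "my-unique-simple-bucket-name",
                                      "bucket": "my-unique-simple-bucket-name"}}],
    }],
})

print(summarize_state(sample))
# With a real project: summarize_state(open("terraform.tfstate").read())
```

In practice, prefer `terraform state list` or `terraform show -json` over parsing the file directly, since the internal format can change between Terraform versions.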

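To recap, the moving parts are:

- The TF configs, written in HCL, and the CLI commands that act on them.

- Terraform Core, which holds the logic.

- The state file.

- The interaction between Terraform and AWS, handled by the AWS provider plugin through AWS's APIs.

- The resources created on the provider.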

Before diving in further, let's review a few key questions and answers to understand the process better.

Q&A

Can I create my provider and publish it?

Yes. You can write your own provider (HashiCorp's Terraform Plugin Framework, written in Go, is intended for this) and publish it to the Terraform Registry.

Can I create and publish modules?

Yes. Modules can be published to the Terraform Registry or shared through a Git repository.

Hands-on

Never write coding documentation without a working example

-Aravind

https://github.com/Aravind-psiog/cloudops-terraform-modules - has a clear README explaining how to set it up and experiment with it.

The real-world example - WIP

https://github.com/Aravind-psiog/cloudops-terraform-modules/tree/cognito-resource-deployment - Work in progress for a bigger picture

With the repo above, you can set up the complete infrastructure described in the real-world example with a single command. It is still a work in progress for that real-world use case.